Today, we will continue to use the dataset from the Chicago Transit Authority.

Data Preparation

To build sequence data, we use sliding windows. If we want to use the past 56 days as the training input, we slide a 56-day window over the past data and use the value that immediately follows each window as its target.

In Keras, tf.keras.utils.timeseries_dataset_from_array() creates a tf.data.Dataset that contains all windows and their targets. The following code creates batches of size 2 from windows of length 3 and their targets.

>>> import tensorflow as tf
>>> my_series = [0, 1, 2, 3, 4, 5]
>>> my_dataset = tf.keras.utils.timeseries_dataset_from_array(
...     my_series,
...     targets=my_series[3:],  # the targets start at the 4th element
...     sequence_length=3,
...     batch_size=2
... )
>>> list(my_dataset)
[(<tf.Tensor: shape=(2, 3), dtype=int32, numpy=
array([[0, 1, 2],
       [1, 2, 3]], dtype=int32)>,
  <tf.Tensor: shape=(2,), dtype=int32, numpy=array([3, 4], dtype=int32)>),
 (<tf.Tensor: shape=(1, 3), dtype=int32, numpy=array([[2, 3, 4]], dtype=int32)>,
  <tf.Tensor: shape=(1,), dtype=int32, numpy=array([5], dtype=int32)>)]

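The windows are [0, 1, 2], [1, 2, 3], and [2, 3, 4], and their targets are 3, 4, and 5. Because there are three windows and the batch size is 2, the last batch contains only one window.

The window() method of tf.data.Dataset works in a similar way, but it returns a dataset of window datasets rather than a dataset of tensors.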
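To make the windowing logic concrete, here is a minimal pure-Python sketch that reproduces the same windows, targets, and batches. The helper names make_windows and make_batches are hypothetical and only illustrate the idea; this is not how Keras implements it.

```python
def make_windows(series, length):
    """Return (window, target) pairs: each window is paired with the value right after it."""
    pairs = []
    for start in range(len(series) - length):
        window = series[start:start + length]
        target = series[start + length]
        pairs.append((window, target))
    return pairs


def make_batches(pairs, batch_size):
    """Group consecutive pairs into batches; the last batch may be smaller."""
    return [pairs[i:i + batch_size] for i in range(0, len(pairs), batch_size)]


my_series = [0, 1, 2, 3, 4, 5]
pairs = make_windows(my_series, length=3)
batches = make_batches(pairs, batch_size=2)
for batch in batches:
    print(batch)
```

Three windows fit in a series of six values, so a batch size of 2 leaves a final batch with a single window.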

>>> for window_dataset in tf.data.Dataset.range(6).window(4, shift=1):
...     for element in window_dataset:
...         print(f'{element}', end=' ')
...     print()
...
0 1 2 3
1 2 3 4
2 3 4 5
3 4 5
4 5
5

You can drop the shorter windows at the end by setting drop_remainder=True.

However, you cannot train a model directly on this nested dataset, because a model expects tensors, not datasets. Convert it to a flat dataset of tensors first by using flat_map().

>>> dataset = tf.data.Dataset.range(6).window(4, shift=1, drop_remainder=True)
>>> dataset = dataset.flat_map(lambda window_dataset: window_dataset.batch(4))
>>> for window_tensor in dataset:
...     print(f'{window_tensor}')
...
[0 1 2 3]
[1 2 3 4]
[2 3 4 5]

It is convenient to wrap these two steps in a helper function.

def to_windows(dataset, length):
    # Create overlapping windows, then flatten each window dataset into one tensor.
    dataset = dataset.window(length, shift=1, drop_remainder=True)
    return dataset.flat_map(lambda window_ds: window_ds.batch(length))

The last step is to split windows into inputs and targets. Use map().

>>> dataset = to_windows(tf.data.Dataset.range(6), 4)
>>> dataset = dataset.map(lambda window: (window[:-1], window[-1]))
>>> list(dataset.batch(2))
[(<tf.Tensor: shape=(2, 3), dtype=int64, numpy=
array([[0, 1, 2],
       [1, 2, 3]])>,
  <tf.Tensor: shape=(2,), dtype=int64, numpy=array([3, 4])>),
 (<tf.Tensor: shape=(1, 3), dtype=int64, numpy=array([[2, 3, 4]])>,
  <tf.Tensor: shape=(1,), dtype=int64, numpy=array([5])>)]

Using Linear Model

You can also use a linear model for time series. In the code below, seq_length is the window length (56 in the example above), and train_ds and valid_ds are the windowed training and validation datasets.

tf.random.set_seed(42)
model = tf.keras.models.Sequential([
    tf.keras.layers.Dense(1, input_shape=[seq_length])
])
# Stop when the validation MAE has not improved for 50 epochs, and keep the best weights.
early_stopping_cb = tf.keras.callbacks.EarlyStopping(
    monitor='val_mae', patience=50, restore_best_weights=True)
opt = tf.keras.optimizers.SGD(learning_rate=0.02, momentum=0.9)
model.compile(loss=tf.keras.losses.Huber(), optimizer=opt, metrics=['mae'])
history = model.fit(train_ds, validation_data=valid_ds, epochs=500,
                    callbacks=[early_stopping_cb])
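The model above is compiled with the Huber loss, which this post does not describe. As a rough sketch, assuming the default threshold delta=1.0, the per-sample loss is quadratic for small errors and linear for large ones. The function name huber below is a hypothetical stand-in, not the Keras implementation.

```python
def huber(y_true, y_pred, delta=1.0):
    """Per-sample Huber loss: quadratic near zero, linear beyond delta."""
    error = y_true - y_pred
    abs_error = abs(error)
    if abs_error <= delta:
        return 0.5 * error ** 2
    return delta * (abs_error - 0.5 * delta)


small = huber(1.0, 0.5)  # |error| = 0.5, inside the quadratic zone
large = huber(1.0, 4.0)  # |error| = 3.0, inside the linear zone
print(small, large)
```

The linear zone makes the loss less sensitive to outliers than the mean squared error.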

Simple RNN

Let’s use a simple RNN with only one recurrent layer with one recurrent neuron.

model = tf.keras.models.Sequential([
    tf.keras.layers.SimpleRNN(1, input_shape=[None, 1])
])

All recurrent layers in Keras expect 3D input with shape [batch_size, time_steps, dimensionality]. The dimensionality is 1 for a univariate series and 2 or more for a multivariate series. When setting the input_shape parameter, omit batch_size. A recurrent layer can take input sequences of any length, so set the first dimension to None.

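This simple RNN does not perform well, for two reasons.

- It has too few parameters: with one recurrent neuron and one input feature, there are only three (an input weight, a recurrent weight, and a bias).

- The default activation function is tanh, so the output is always between -1 and 1 and cannot match targets outside that range.

We can instead use a recurrent layer with 32 neurons, followed by a dense output layer with no activation function, so the range of the output is not limited.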
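Both limitations can be seen in a small sketch of what a single recurrent neuron computes. The weight values below are arbitrary placeholders chosen for illustration, and the function names are hypothetical.

```python
import math


def simple_rnn_one_unit(inputs, w_x, w_h, b):
    """Forward pass of one recurrent neuron: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0  # the initial hidden state is zero
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
    return h  # only the last output is returned


def simple_rnn_param_count(units, input_dim):
    """Input weights + recurrent weights + biases of a simple recurrent layer."""
    return units * input_dim + units * units + units


output = simple_rnn_one_unit([0.2, 0.9, 1.5, 3.0], w_x=0.8, w_h=0.5, b=0.1)
print(output)                          # always within [-1, 1] because of tanh
print(simple_rnn_param_count(1, 1))    # one neuron, one input feature
print(simple_rnn_param_count(32, 1))   # 32 neurons, one input feature
```

Whatever the inputs are, tanh keeps the output within [-1, 1], and a single neuron has only three parameters to fit the whole series.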

univar_model = tf.keras.Sequential([
    tf.keras.layers.SimpleRNN(32, input_shape=[None, 1]),
    tf.keras.layers.Dense(1)  # no activation, so the output range is unbounded
])

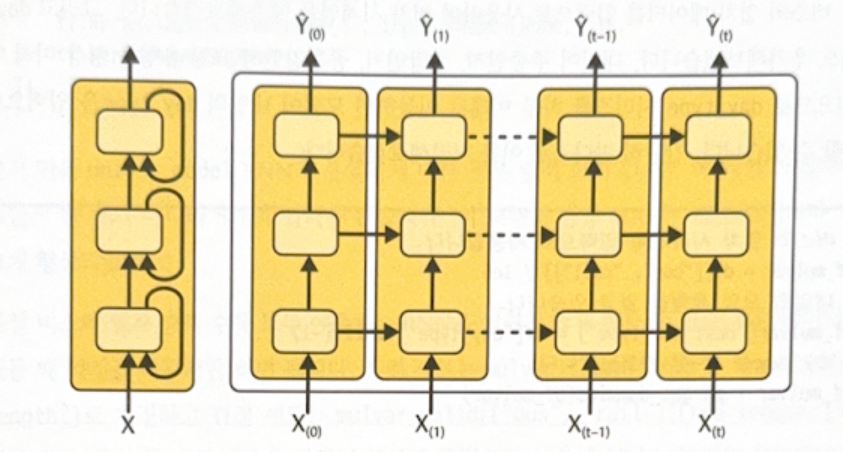

Deep RNN

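A deep RNN stacks multiple recurrent layers. Every recurrent layer except the last one must set return_sequences=True, so that the next recurrent layer receives a 3D sequence instead of only the last output.

deep_model = tf.keras.Sequential([
    tf.keras.layers.SimpleRNN(32, return_sequences=True, input_shape=[None, 1]),
    tf.keras.layers.SimpleRNN(32, return_sequences=True),
    tf.keras.layers.SimpleRNN(32),
    tf.keras.layers.Dense(1)
])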
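To see why return_sequences matters in a stack of recurrent layers, the sketch below traces output shapes through the layers of the deep model. The two helper functions are hypothetical and only mimic the shape rules; the batch size of 32 and the sequence length of 56 are assumed example values.

```python
def simple_rnn_shape(input_shape, units, return_sequences=False):
    """Output shape of a recurrent layer for a 3D input [batch, steps, features]."""
    batch, steps, _features = input_shape
    if return_sequences:
        return (batch, steps, units)  # one output vector per time step
    return (batch, units)             # only the last time step


def dense_shape(input_shape, units):
    """A dense layer only changes the last dimension."""
    return input_shape[:-1] + (units,)


shapes = [(32, 56, 1)]  # assumed: batch of 32 windows, 56 time steps, 1 feature
shapes.append(simple_rnn_shape(shapes[-1], 32, return_sequences=True))
shapes.append(simple_rnn_shape(shapes[-1], 32, return_sequences=True))
shapes.append(simple_rnn_shape(shapes[-1], 32))
shapes.append(dense_shape(shapes[-1], 1))
for shape in shapes:
    print(shape)
```

If an intermediate layer dropped return_sequences=True, the next recurrent layer would receive a 2D input, which does not match the 3D shape it expects.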

Multivariate Time Series

When handling a multivariate time series, there is no big difference. Just build a dataset with multiple variables and set the input_shape of the network accordingly.

For the code, see Go for Codes. It uses 5 input variables (bus, rail, and the day type, which takes 3 columns, one per type) to predict the number of rail passengers on the next day.

If you want to predict two values at once (e.g. rail and bus passengers), change the targets when building the dataset and use two units in the last dense layer.

Predicting multiple time steps ahead

There are two ways to predict multiple time steps ahead (e.g. the number of passengers for each of the next 14 days).

The first method is to predict the next value, append it to the inputs as if it were a real observation, and predict again. By repeating this, you get a sequence of future values.

# Method 1
import numpy as np

X = rail_valid.to_numpy()[np.newaxis, :seq_length, np.newaxis]
for step_ahead in range(14):
    y_pred_one = univar_model.predict(X)  # predict the next day
    # append the prediction to the inputs as if it were a real value
    X = np.concatenate([X, y_pred_one.reshape(1, 1, 1)], axis=1)
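The feedback loop of the first method can be shown without a trained network. In the minimal sketch below, mean_of_last_three is a hypothetical stand-in for the model and forecast_iteratively is an illustrative helper name; only the looping pattern is the point.

```python
def forecast_iteratively(history, predict_next, steps):
    """Predict one step at a time, feeding each prediction back as an input."""
    window = list(history)
    forecasts = []
    for _ in range(steps):
        y_pred = predict_next(window)
        forecasts.append(y_pred)
        window.append(y_pred)  # the prediction is treated as a real observation
    return forecasts


def mean_of_last_three(window):
    """Toy model (assumption): predicts the mean of the last three values."""
    return sum(window[-3:]) / 3


forecasts = forecast_iteratively([3.0, 6.0, 9.0], mean_of_last_three, steps=3)
print(forecasts)
```

Because each prediction becomes an input for the next one, errors can accumulate as the forecast horizon grows.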

The second method is to build an RNN that predicts the next 14 days at once.

# Method 2
def split_inputs_and_targets(mulvar_series, ahead=14, target_col=1):
    # inputs: all time steps except the last `ahead`
    # targets: the last `ahead` values of the target column
    return mulvar_series[:, :-ahead], mulvar_series[:, -ahead:, target_col]

ahead_train_ds = tf.keras.utils.timeseries_dataset_from_array(
    mulvar_train.to_numpy(),
    targets=None,
    sequence_length=seq_length + 14,  # inputs plus the next 14 days
    batch_size=32,
    shuffle=True,
    seed=42
).map(split_inputs_and_targets)

ahead_valid_ds = tf.keras.utils.timeseries_dataset_from_array(
    mulvar_valid.to_numpy(),
    targets=None,
    sequence_length=seq_length + 14,
    batch_size=32
).map(split_inputs_and_targets)

ahead_model = tf.keras.Sequential([
    tf.keras.layers.SimpleRNN(32, input_shape=[None, 5]),
    tf.keras.layers.Dense(14)  # 14 output units, one per forecast day
])
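The intended split for the second method can be checked on a toy batch: the inputs are all time steps except the last ones, and the targets are the last values of the target column. The sketch below uses nested lists instead of tensors, and split_window_batch is a hypothetical name used only for this illustration.

```python
def split_window_batch(batch, ahead, target_col):
    """Split each window into inputs (all but the last `ahead` steps)
    and targets (the target column of the last `ahead` steps)."""
    inputs = [window[:-ahead] for window in batch]
    targets = [[row[target_col] for row in window[-ahead:]] for window in batch]
    return inputs, targets


# One toy window with 5 time steps and 2 features per step (made-up values).
batch = [[[0, 10], [1, 11], [2, 12], [3, 13], [4, 14]]]
inputs, targets = split_window_batch(batch, ahead=2, target_col=1)
print(inputs)
print(targets)
```

With ahead=2, the first three steps become the inputs and the target column of the last two steps becomes the target vector.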

You can improve performance by using a sequence-to-sequence model.

Sequence-to-Sequence model

The model above predicts 14 values only at the last time step (sequence-to-vector). To improve performance, you can use a sequence-to-sequence model that predicts 14 values at every time step.

The dataset for this model should contain sequences of windows: at each time step, the input is the current value and the target is the window of values that follow it. To build this, apply the to_windows() helper twice. The following code creates sequences of 4 consecutive windows, each of length 3.

>>> my_series = tf.data.Dataset.range(7)
>>> dataset = to_windows(to_windows(my_series, 3), 4)
>>> list(dataset)
[<tf.Tensor: shape=(4, 3), dtype=int64, numpy=
array([[0, 1, 2],
       [1, 2, 3],
       [2, 3, 4],
       [3, 4, 5]])>,
 <tf.Tensor: shape=(4, 3), dtype=int64, numpy=
array([[1, 2, 3],
       [2, 3, 4],
       [3, 4, 5],
       [4, 5, 6]])>]

After this, use map() to split input and target.

>>> dataset = dataset.map(lambda S: (S[:, 0], S[:, 1:]))
>>> list(dataset)
[(<tf.Tensor: shape=(4,), dtype=int64, numpy=array([0, 1, 2, 3])>,
  <tf.Tensor: shape=(4, 2), dtype=int64, numpy=
array([[1, 2],
       [2, 3],
       [3, 4],
       [4, 5]])>),
 (<tf.Tensor: shape=(4,), dtype=int64, numpy=array([1, 2, 3, 4])>,
  <tf.Tensor: shape=(4, 2), dtype=int64, numpy=
array([[2, 3],
       [3, 4],
       [4, 5],
       [5, 6]])>)]

For example, for the input [0, 1, 2, 3], the target is [[1, 2], [2, 3], [3, 4], [4, 5]]: at each time step, the target is the next two values.
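The same nested windowing can be reproduced with plain lists to check the inputs and targets. In this sketch, list_windows is a hypothetical list-based counterpart of the to_windows() helper, not part of TensorFlow.

```python
def list_windows(seq, length):
    """All full-length sliding windows over a list (shift of 1)."""
    return [seq[i:i + length] for i in range(len(seq) - length + 1)]


series = list(range(7))
# Windows of length 3, then sequences of 4 consecutive windows.
nested = list_windows(list_windows(series, 3), 4)

# Input: the first value of each window. Target: the remaining values of each window.
pairs = [([w[0] for w in S], [w[1:] for w in S]) for S in nested]
for inputs, targets in pairs:
    print(inputs, targets)
```

Each input sequence has 4 time steps, and each time step has a target of length 2, which matches the output shown above.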

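The sequence-to-sequence model is similar to the previous model. Just set return_sequences=True, so that the recurrent layer outputs a vector at every time step. The dense layer is then applied at every time step, and it needs 14 units to predict 14 values per step.

seq2seq_model = tf.keras.Sequential([
    tf.keras.layers.SimpleRNN(32, input_shape=[None, 5], return_sequences=True),
    tf.keras.layers.Dense(14)  # 14 forecast values at every time step
])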

All images, except those with a separate source attribution, are taken from the lecture materials.
