About this Article

- Authors: Yunjey Choi, Minje Choi, Munyoung Kim, Jung-Woo Ha, Sunghun Kim, Jaegul Choo

- Venue: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

- Year: 2018

- Official Citation: Choi, Y., Choi, M., Kim, M., Ha, J. W., Kim, S., & Choo, J. (2018). StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 8789-8797).

Accomplishments

- Proposed StarGAN, which performs image-to-image translation across multiple domains using only a single model.

Key Points

1. Terms

- attribute: a meaningful feature inherent in an image (e.g. hair color, gender, age).

- attribute value: a particular value of an attribute (e.g. black hair, male).

- domain: a set of images sharing the same attribute value.

2. Overall Architecture

To learn mappings among k domains, previous cross-domain models need $k(k-1)$ generators, one for each ordered pair of domains. StarGAN solves this problem with a single generator that takes both an image and domain information (the target domain label) as inputs. The model is then trained to flexibly translate the input image into the corresponding domain.
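The StarGAN paper gives a single G both inputs by replicating each entry of the target label into a constant plane and concatenating those planes to the image channels. The sketch below illustrates this in pure Python. It assumes an image is stored as a list of H×W channels. The helpers `concat_label` and `pairwise_generators` are hypothetical names, and a real implementation would operate on tensors.

```python
from typing import List

Image = List[List[List[float]]]  # channels x height x width


def concat_label(image: Image, label: List[float]) -> Image:
    """Spatially replicate each label entry into a constant H x W plane
    and append the planes to the image channels (depth-wise concatenation)."""
    height = len(image[0])
    width = len(image[0][0])
    label_planes = [[[value] * width for _ in range(height)] for value in label]
    return image + label_planes


def pairwise_generators(k: int) -> int:
    """Generators a pairwise model needs for k domains: one per ordered pair."""
    return k * (k - 1)


# A toy 3-channel 2x2 "image" and a 3-dimensional target domain label.
rgb = [[[0.1, 0.2], [0.3, 0.4]] for _ in range(3)]
target = [1.0, 0.0, 1.0]
g_input = concat_label(rgb, target)
print(len(g_input))            # 6 channels: 3 image + 3 label
print(g_input[3])              # [[1.0, 1.0], [1.0, 1.0]]
print(pairwise_generators(5))  # 20 pairwise generators vs. one StarGAN generator
```

Concatenating along the channel axis lets the first convolution see the target label at every spatial position. This is why one generator can serve all domains.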

As in the original GAN, there is a generator (G) and a discriminator (D). G generates an image, conditioned on the target domain in StarGAN, that should be indistinguishable from real images, while D tries to tell fake images from real ones. In StarGAN, D produces probability distributions over both sources (real or fake) and domain labels, denoted as $D: x \rightarrow \{D_{src}(x), D_{cls}(x)\}$.
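One way to picture the two-headed discriminator is shared features feeding two separate outputs. The toy sketch below follows the probabilistic description above, using a sigmoid for $D_{src}$ and a softmax for $D_{cls}$. The linear heads and the name `toy_discriminator` are hypothetical. Real implementations use convolutional layers, and with the WGAN-GP objective the source head outputs an unbounded score rather than a probability.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_discriminator(
    features: Sequence[float],
    w_src: Sequence[float],
    w_cls: Sequence[Sequence[float]],
) -> Tuple[float, List[float]]:
    """Shared features feed two heads:
    D_src -> probability that the input is real,
    D_cls -> probability distribution over domain labels."""
    src_logit = sum(w * f for w, f in zip(w_src, features))
    cls_logits = [sum(w * f for w, f in zip(row, features)) for row in w_cls]
    return sigmoid(src_logit), softmax(cls_logits)


# Hypothetical weights for 2 shared features and 3 domains.
p_real, p_domains = toy_discriminator(
    [1.0, 2.0],
    w_src=[0.5, -0.25],
    w_cls=[[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]],
)
print(p_real)     # 0.5, since the source logit is 0.5*1.0 - 0.25*2.0 = 0
print(p_domains)  # sums to 1; the second domain gets the highest probability
```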

3. Objective and Process

StarGAN uses three objective functions (losses).


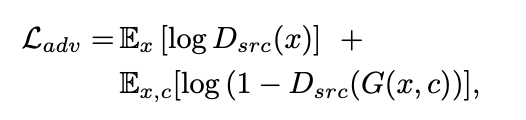

1. Adversarial Loss

This is similar to the objective function of the original GAN, except that G also takes the target domain label c as input.

- $D_{src}(x)$: the probability, assigned by D, that x is a real image.

- $G(x,c)$: an image generated by G from the input image x and the target domain label c.

G can only affect the second term, $\log(1-D_{src}(G(x,c)))$. $D_{src}(G(x,c))$ approaches 1 when the images generated by G are hard to distinguish from real ones, and in that case $\log(1-D_{src}(G(x,c)))$ approaches $-\infty$. G tries to minimize this term, which means making D bad at distinguishing real from fake images. D, in contrast, tries to maximize the objective: if D discriminates well, $D_{src}(G(x,c))$ approaches 0 and the second term approaches 0, its maximum value.
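The adversarial loss $\mathcal{L}_{adv} = \mathbb{E}_{x}[\log D_{src}(x)] + \mathbb{E}_{x,c}[\log(1 - D_{src}(G(x,c)))]$ can be estimated by averaging over a batch. The sketch below assumes the $D_{src}$ outputs are already available as probabilities. `adversarial_loss` is a hypothetical helper, not the authors' code.

```python
import math
from typing import Sequence


def adversarial_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Batch estimate of L_adv.
    d_real: D_src outputs on real images x.
    d_fake: D_src outputs on generated images G(x, c)."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# D is winning: reals scored high, fakes scored low -> L_adv close to 0 (its maximum).
d_winning = adversarial_loss([0.99, 0.98], [0.01, 0.02])
# G is winning: fakes scored close to 1 -> the fake term becomes strongly negative.
g_winning = adversarial_loss([0.99, 0.98], [0.999, 0.9999])
print(d_winning, g_winning)
```

D updates its parameters to push this value up, and G updates its parameters to push the fake term down.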

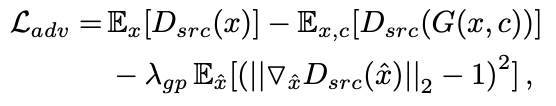

To stabilize training, the authors replace this formula with the Wasserstein GAN objective with gradient penalty (WGAN-GP), as below.


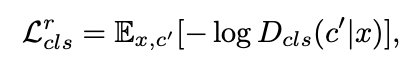
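In the paper, the WGAN-GP version of the adversarial loss is $\mathcal{L}_{adv} = \mathbb{E}_{x}[D_{src}(x)] - \mathbb{E}_{x,c}[D_{src}(G(x,c))] - \lambda_{gp}\mathbb{E}_{\hat{x}}[(\lVert\nabla_{\hat{x}} D_{src}(\hat{x})\rVert_{2} - 1)^{2}]$. Here $\hat{x}$ is sampled uniformly along straight lines between real and generated images, and the paper sets $\lambda_{gp} = 10$.

The sketch below is illustrative only. It estimates the gradient with finite differences so it runs without an autodiff library; real training code uses automatic differentiation. The linear critics and the helper names are hypothetical.

```python
import math
import random
from typing import Callable, List, Optional, Sequence

Vector = List[float]
Critic = Callable[[Vector], float]


def numeric_grad(f: Critic, x: Vector, h: float = 1e-5) -> Vector:
    """Central finite-difference estimate of the gradient of scalar f at x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2.0 * h))
    return grad


def wgan_gp_adv_loss(
    critic: Critic,
    reals: Sequence[Vector],
    fakes: Sequence[Vector],
    lambda_gp: float = 10.0,
    rng: Optional[random.Random] = None,
) -> float:
    """E[D_src(x)] - E[D_src(G(x,c))] - lambda_gp * E[(||grad D_src(x_hat)||_2 - 1)^2].
    `fakes` stands in for G(x, c); each x_hat is a random point on the
    line between a real sample and a generated sample."""
    if rng is None:
        rng = random.Random(0)
    real_term = sum(critic(x) for x in reals) / len(reals)
    fake_term = sum(critic(y) for y in fakes) / len(fakes)
    penalty = 0.0
    for x, y in zip(reals, fakes):
        eps = rng.random()
        x_hat = [eps * a + (1.0 - eps) * b for a, b in zip(x, y)]
        grad_norm = math.sqrt(sum(g * g for g in numeric_grad(critic, x_hat)))
        penalty += (grad_norm - 1.0) ** 2
    penalty /= len(reals)
    return real_term - fake_term - lambda_gp * penalty


def unit_critic(v: Vector) -> float:   # hypothetical: gradient norm exactly 1
    return 0.6 * v[0] + 0.8 * v[1]


def steep_critic(v: Vector) -> float:  # hypothetical: gradient norm 5
    return 3.0 * v[0] + 4.0 * v[1]


reals, fakes = [[1.0, 1.0]], [[0.0, 0.0]]
print(wgan_gp_adv_loss(unit_critic, reals, fakes))   # about 1.4: no penalty
print(wgan_gp_adv_loss(steep_critic, reals, fakes))  # about 7 - 10 * (5 - 1)^2 = -153
```

The penalty pushes D toward gradients of norm 1 (a soft 1-Lipschitz constraint). This is what keeps the Wasserstein critic well behaved.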

2. Domain Classification Loss

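To make sure the generated images are classified into the intended target domain, the authors add an auxiliary classifier on top of D. They then define a domain classification loss composed of two terms.

The first term, the domain classification loss of real images, is used to optimize D: D learns to classify a real image x into its original domain $c^{\prime}$. D minimizes this objective.

- $D_{cls}(c^{\prime}\vert x)$: the probability distribution over domain labels computed by D, evaluated at the original domain label $c^{\prime}$.

The second term, the domain classification loss of fake images, is used to optimize G: G learns to generate images that D classifies as the target domain c. G minimizes this objective.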


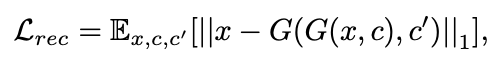
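The two terms are $\mathcal{L}_{cls}^{r} = \mathbb{E}_{x,c^{\prime}}[-\log D_{cls}(c^{\prime}\vert x)]$ for D and $\mathcal{L}_{cls}^{f} = \mathbb{E}_{x,c}[-\log D_{cls}(c\vert G(x,c))]$ for G. Both are the same negative log-likelihood; they differ only in which images and which labels are scored. The sketch below assumes a single categorical label per image, as with RaFD expressions. For multi-label attributes such as those used from CelebA, a per-attribute binary cross-entropy would replace it. `cls_loss` is a hypothetical helper.

```python
import math
from typing import Sequence


def cls_loss(probs_batch: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Batch average of -log D_cls(label | image)."""
    return -sum(math.log(p[c]) for p, c in zip(probs_batch, labels)) / len(labels)


# L_cls^r: D_cls on real images x, scored against their original labels c'.
real_probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
original_labels = [0, 1]
loss_real = cls_loss(real_probs, original_labels)  # minimized by D

# L_cls^f: D_cls on generated images G(x, c), scored against the target labels c.
fake_probs = [[0.2, 0.1, 0.7], [0.3, 0.6, 0.1]]
target_labels = [2, 1]
loss_fake = cls_loss(fake_probs, target_labels)    # minimized by G
print(loss_real, loss_fake)
```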

3. Reconstruction Loss

The two losses above do not guarantee that G changes only the domain-related parts of the input image while preserving the rest of its content. To alleviate this problem, the authors apply one additional objective to G: a cycle consistency loss, also called the reconstruction loss.

- Steps:

- Generate an image with G from the input image x and the target domain label c.

- Feed the generated image from step 1 back into the same G, this time with the original domain label $c^{\prime}$ as the target, to produce a reconstructed image.

- If G preserves the attributes that should stay untouched, the reconstructed image from step 2 should be similar to the original image. The difference between them (measured with the L1 norm) then becomes small.


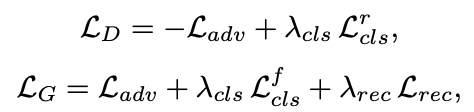
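The reconstruction loss is $\mathcal{L}_{rec} = \mathbb{E}_{x,c,c^{\prime}}[\lVert x - G(G(x,c), c^{\prime})\rVert_{1}]$. The sketch below follows the three steps on toy 3-element "images". The generators `good_G` and `bad_G` are hypothetical stand-ins for a trained network. Implementations often average the absolute difference per pixel instead of summing it, which only rescales the loss.

```python
from typing import Callable, List

Vector = List[float]
GeneratorFn = Callable[[Vector, int], Vector]


def l1_norm(a: Vector, b: Vector) -> float:
    """||a - b||_1: sum of absolute element-wise differences."""
    return sum(abs(u - v) for u, v in zip(a, b))


def reconstruction_loss(G: GeneratorFn, x: Vector, c: int, c_orig: int) -> float:
    fake = G(x, c)         # step 1: translate x into the target domain c
    rec = G(fake, c_orig)  # step 2: translate back into the original domain c'
    return l1_norm(x, rec) # step 3: compare the reconstruction with the original


def good_G(x: Vector, c: int) -> Vector:
    """Hypothetical G: rewrites only the domain entry x[0], keeps everything else."""
    return [float(c)] + x[1:]


def bad_G(x: Vector, c: int) -> Vector:
    """Hypothetical G: rewrites x[0] but also drifts every other entry by 0.1."""
    return [float(c)] + [v + 0.1 for v in x[1:]]


x = [0.0, 0.5, 0.25]  # toy "image"; x[0] = 0 encodes the original domain c' = 0
print(reconstruction_loss(good_G, x, c=1, c_orig=0))  # 0.0
print(reconstruction_loss(bad_G, x, c=1, c_orig=0))   # about 0.4
```

Only `bad_G`, which alters content unrelated to the domain, is penalized. This is exactly the behavior the cycle consistency loss is meant to discourage.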

Full objective

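$\mathcal{L}_{D}$ is the objective for D, and $\mathcal{L}_{G}$ is the objective for G.

- $\lambda_{cls}$ and $\lambda_{rec}$ are hyperparameters that control the relative importance of the domain classification and reconstruction losses compared with the adversarial loss. The paper uses $\lambda_{cls} = 1$ and $\lambda_{rec} = 10$.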
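Written out, the full objectives combine the three losses as follows. $\mathcal{L}_{cls}^{r}$ and $\mathcal{L}_{cls}^{f}$ are the domain classification losses on real and fake images, and $\mathcal{L}_{rec}$ is the reconstruction loss. D minimizes $\mathcal{L}_{D}$, so the minus sign means D maximizes $\mathcal{L}_{adv}$. G minimizes $\mathcal{L}_{G}$.

$$
\mathcal{L}_{D} = -\mathcal{L}_{adv} + \lambda_{cls}\,\mathcal{L}_{cls}^{r}
$$

$$
\mathcal{L}_{G} = \mathcal{L}_{adv} + \lambda_{cls}\,\mathcal{L}_{cls}^{f} + \lambda_{rec}\,\mathcal{L}_{rec}
$$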

4. More About Training


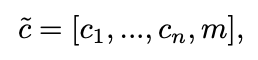

1. Mask Vector

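When training on multiple datasets, each dataset has labels only for its own attributes. For example, CelebA provides hair color and gender labels but no facial expression labels, while RaFD provides facial expression labels but not the CelebA attributes. This is a problem because reconstructing the input image x from $G(x,c)$ requires the complete label vector $c^{\prime}$. To alleviate this problem, the authors introduce a mask vector m. It lets StarGAN ignore unspecified labels and focus on the explicitly known label of a particular dataset. The label is represented as $\tilde{c} = [c_{1}, \ldots, c_{n}, m]$, where $[\cdot]$ denotes concatenation and: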

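- $c_{i}$: the label vector of the i-th dataset, represented as a binary vector for binary attributes or a one-hot vector for categorical attributes. The labels of the remaining n-1 datasets are unknown and are filled with zeros.

- m (mask vector): an n-dimensional one-hot vector indicating which dataset the image comes from, where n is the number of datasets. In the paper, the authors used two datasets (CelebA and RaFD), so n = 2.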

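The unified label $\tilde{c}$ can be assembled with a short helper. This sketch assumes 5 binary CelebA attributes and 8 RaFD expressions, ordered CelebA first so that the mask (0, 1) marks a RaFD image. These sizes and the helper name `unified_label` are illustrative assumptions, not the authors' code.

```python
from typing import List


def unified_label(known: List[float], dataset: int, label_dims: List[int]) -> List[float]:
    """Build c~ = [c_1, ..., c_n, m]: the known label goes in its dataset's slot,
    the other n-1 label slots are zero, and m is a one-hot mask over datasets."""
    if len(known) != label_dims[dataset]:
        raise ValueError("label length does not match the dataset's label dimension")
    parts: List[float] = []
    for i, dim in enumerate(label_dims):
        parts.extend(known if i == dataset else [0.0] * dim)
    mask = [1.0 if i == dataset else 0.0 for i in range(len(label_dims))]
    return parts + mask


dims = [5, 8]                               # assumed: CelebA attributes, RaFD expressions
celeba_label = [0.0, 1.0, 0.0, 1.0, 1.0]    # hypothetical binary attribute values
rafd_label = [0.0] * 8
rafd_label[3] = 1.0                         # hypothetical one-hot expression

c_celeba = unified_label(celeba_label, 0, dims)
c_rafd = unified_label(rafd_label, 1, dims)
print(len(c_rafd))   # 15 = 5 + 8 + 2
print(c_rafd[-2:])   # [0.0, 1.0]: the mask says "this image comes from RaFD"
```

Because every image yields a vector of the same length, one generator and one discriminator can be shared across both datasets.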

2. Training Strategy

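During training, D tries to minimize only the classification error associated with the known label. For example, when training with CelebA images, D minimizes only the classification errors for the CelebA attributes, not for the RaFD facial expressions. By alternating between the datasets, D learns discriminative features for all of them, and G learns to control all the labels.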
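Restricting the classification error to the known label amounts to slicing out the current dataset's part of $D_{cls}$'s output. The sketch below assumes D outputs one score per label slot (5 CelebA plus 8 RaFD, as in the unified label). It also assumes binary cross-entropy for the CelebA attributes and categorical cross-entropy for the RaFD expressions. These choices and the helper names are illustrative assumptions.

```python
import math
from typing import List, Sequence


def softplus(z: float) -> float:
    """log(1 + e^z), computed in a numerically stable way."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def binary_cross_entropy(logits: Sequence[float], targets: Sequence[float]) -> float:
    """Mean BCE over independent binary attributes (targets in {0, 1})."""
    return sum(softplus(z) - t * z for z, t in zip(logits, targets)) / len(logits)


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target] for a single categorical label."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def masked_cls_loss(cls_logits: List[float], label: List[float],
                    dataset: int, label_dims: List[int]) -> float:
    """Classification loss restricted to the label slot of `dataset`;
    scores for the other datasets' labels are ignored entirely."""
    start = sum(label_dims[:dataset])
    end = start + label_dims[dataset]
    logits, targets = cls_logits[start:end], label[start:end]
    if dataset == 0:  # assumed CelebA slot: several binary attributes
        return binary_cross_entropy(logits, targets)
    return cross_entropy(logits, targets.index(1.0))  # assumed RaFD slot: one-hot


dims = [5, 8]
label = [0.0] * 13 + [0.0, 1.0]  # [c_1, c_2, m] for a RaFD image
label[5 + 3] = 1.0               # hypothetical expression index 3
logits_a = [0.0] * 13
logits_a[5 + 3] = 2.0
logits_b = list(logits_a)
logits_b[:5] = [9.0, -9.0, 3.0, 0.5, -2.0]  # arbitrary scores in the CelebA slot
loss_a = masked_cls_loss(logits_a, label, 1, dims)
loss_b = masked_cls_loss(logits_b, label, 1, dims)
print(loss_a == loss_b)  # True: the CelebA scores do not affect a RaFD image's loss
```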

Experiments and Insights

1. CelebA Dataset

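From the CelebA dataset, the authors used facial attributes such as hair color, gender, and age. In qualitative evaluation, the authors report that StarGAN produces the best results among the compared methods. They give two reasons for this success: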

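- Multi-task learning framework: instead of learning one fixed translation (e.g., brown to blond hair), which is prone to overfitting, the model learns to translate images flexibly according to the target domain label. This acts as a kind of regularization.

- Preservation of the original facial identity: StarGAN uses activation maps from convolutional layers as its latent representation, which keeps spatial information, rather than compressing the image into a low-dimensional latent vector.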

The authors also ran a quantitative evaluation in the form of a user study. Participants were shown four images generated by different methods and asked to pick the best one.

StarGAN performed especially well on multi-attribute transfer tasks.

2. RaFD Dataset

The RaFD dataset contains images of facial expressions (e.g., happy, sad). In qualitative evaluation, the authors again report that StarGAN produces the best results. The main reason is the implicit data augmentation effect of multi-task learning: a single model learns from the images of all expression domains rather than from one pair of domains. This allowed StarGAN to maintain the quality and sharpness of its generated outputs.

For quantitative evaluation, the authors trained a ResNet classifier on facial expressions and measured its classification error on the generated images. StarGAN achieved the lowest classification error. It also required the fewest parameters, since a single generator handles all domains.

3. CelebA + RaFD Dataset

Both datasets were used to train StarGAN jointly. StarGAN trained on both datasets (StarGAN-JNT) was compared with StarGAN trained only on RaFD (StarGAN-SNG), and StarGAN-JNT produced better results.

The authors then replaced the proper mask vector (0, 1) with a wrong one (1, 0). With the wrong mask, the model ignored the facial expression label and instead treated the CelebA part of the label as valid. As a result, it changed the age of the face (an attribute of CelebA) rather than its expression. This result shows that the mask vector works as intended: the model uses label information only from the dataset designated by the mask.
